Expanding the Legacy

Tag: Futurology

1 Post

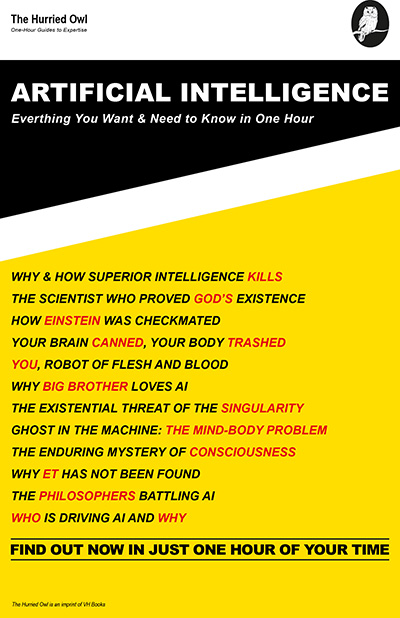

Blowing the Whistle on AI

ChatGPT suffers from artificial hallucination with hints of sociopathy. When stumped, the much-hyped chatbot will brazenly resort to lying, spitting out plausible-sounding answers compiled from random falsehoods. Unable to make it, the bot will happily fake it. In May, New York lawyer Steven A. Schwartz discovered this the hard...