Finding, Dissecting, and Dealing with AI Hallucinations

Cannibalistic AI

Artificial Intelligence (AI) is taking over the internet with untold millions of webpages generated by bots at the request of entrepreneurs looking to get ‘money for nothing’ – if only because the ‘chicks’ are not usually ‘free’ for nerds.

It is a great business by any standard. Ask any chatbot powered by a large language model (LLM) to write a few hundred words on any given topic and a webpage is born. No need to hire and pay writers, editors, proofreaders, or any other creative professional. If you’re clueless about the topic, just ask Claude, Pi, or Poe for suggestions. What’s not to like?

However, Silicon Valley has a problem. AI is trained on the internet. In fact, it brazenly steals content generated by humans and paid for by their employers. AI companies, often cutely called ‘startups’ to elicit a measure of goodwill and empathy, dispatch hordes of bots to canvas the internet hunting for images and text to feed their insatiable models. This ‘scraping’ mostly ignores strings of code that asks these visitors not to index a particular domain.

OpenAI’s famous ChatGPT often regurgitates entire newspaper articles whilst Anthropic’s Claude has a penchant for quoting the lyrics of songs it memorised. As AI is not very smart and fails to cover its tracks as it raids intellectual property, the scraping sometimes goes wrong as happened with Stable Diffusion which coughs up copyrighted images, with their watermarks clearly visible, opening a Pandora’s box of litigation.

This is how scraping works (in a nutshell): the gathered data is split into ever smaller tokens – numerical representations of text, images, or sound – which the model then processes to learn how these fragments relate to each other. It does so by trial and error. The more tokens a system processes, the better it is able to respond accurately to user queries.

Fair Use

Casting such a wide net catches much data that is either proprietary or copyrighted. AI evangelists argue, not unreasonably, that using such data to create new output is nothing new and falls well outside the remit of intellectual property law. After all, humans always built and expanded on the efforts and ideas of others to create new work. Ford Motor Company can hardly be blamed, or charged for, using and monetising technology it did not invent such as, say, the wheel.

Craig Peters, chief exec of Getty Images, expressed his frustration during a senate hearing in January: “AI companies are spending billions of dollars on computer chips and energy, but they’re unwilling to put a similar investment into content.” His colleague at Condé Nast (publisher of Vogue and The New Yorker), Roger Lynch agreed and surmised: “Today’s generative AI tools were built with stolen goods.”

Messrs Peters and Lynch touched upon the core of one of many Generative AI conundrums. For training and performance, AI needs two basic ingredients: computing power and data – ideally both. The current shortage of computer chips may be partially offset by feeding LLMs more data which is why AI firms are doubling down on scraping.

Most publishers have not forgotten how advertising revenue was rerouted away from them in the early days of the internet when ‘news aggregators’ poached their content and file-sharing networks depleted their vaults. Most news and current affair websites (including this one) block AI crawlers from accessing their content.

The New York Times, the world’s largest newspaper, went further and is suing OpenAI and Microsoft for infringing the copyright of over three million of its articles. Universal Music Group hauled Anthropic to court for its unauthorised use of song texts whilst Getty Images seeks damages from Stability AI for copying its photos. The three AI purveyors deny any wrongdoing and demand protection under the aegis of ‘fair use’ clauses of US copyright law. They are likely to have a point and a precedent.

In 2015, a US court ruled against The Authors Guild which had sued Google for digitising without its permission an untold number of books to make the texts searchable whilst only displaying small snippets online. The court considered Google’s approach ‘transformative’ and deemed it ‘fair use’.

Dark Art

However, copyright law is notoriously vague and its understanding and interpretation are often likened to a ‘dark art’. Last year, the US Supreme Court ruled that is painting by the late Andy Warhol of Prince – part of a celebrity silkscreen series started in the early 1960s – did not fall under the provisions of fair use. Mr Warhol had used a copyrighted photograph of Prince to produce his ‘Orange Prince’ art piece.

Though the AI startups will likely get away with their appropriation of intellectual property in the US, they will likely find European courts less forgiving. Whilst data-mining is generally allowed though frowned upon, ‘fair use’ exceptions are absent.

The one certain outcome of present and future court cases over the intellectual property that provides AI its nourishment, is that lawyers stand to make a veritable killing.

One growing problem that AI companies must face as they exhaust the internet is that their catch is increasingly generated by artificial intelligence. This leads to data cannibalism and potentially dangerous feedback loops that distort and may even derail AI’s learning curve by compounding and amplifying initial errors.

Epoch AI, a research firm, thinks that in about two years’ time, AI crawlers and scrapers will have exhausted the internet’s stock of human-generated data. Industry insiders speak of a hard ‘data wall’ that will soon be hit and stop LLM development in its tracks.

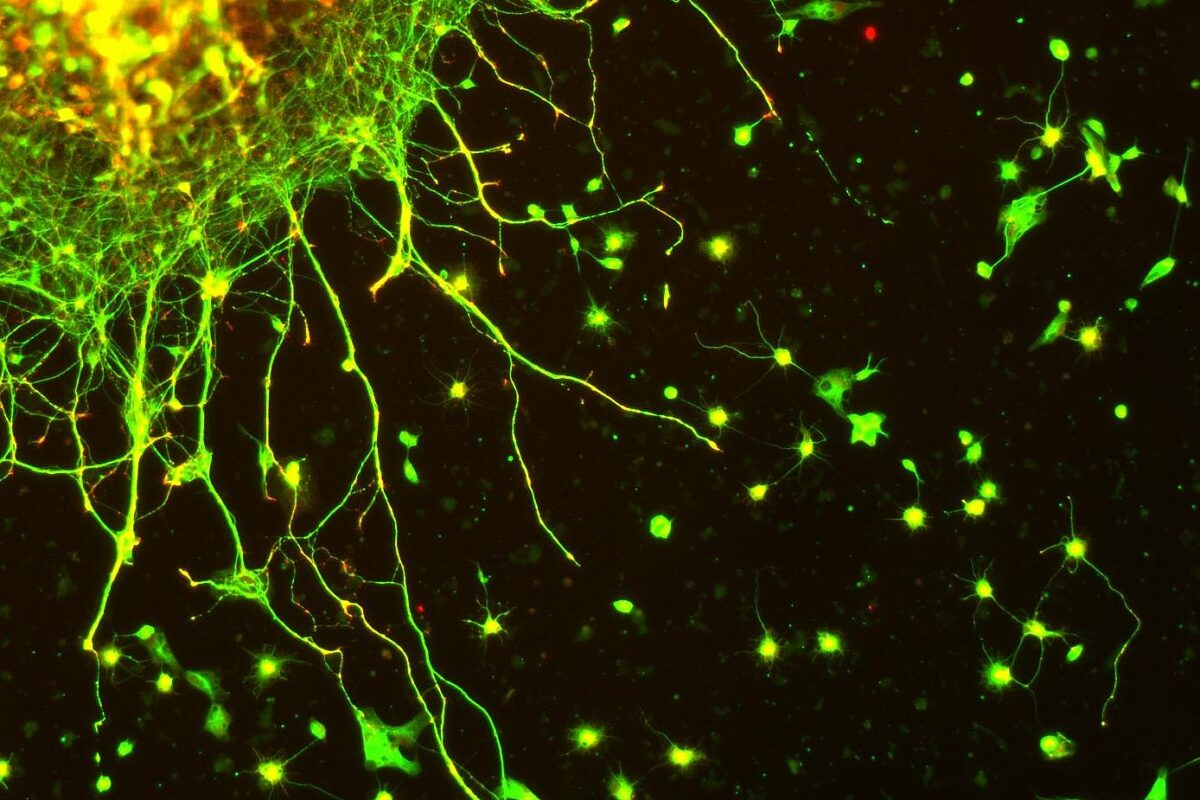

Large language models are grown rather than designed. They use deep learning to get their smarts. The technique simulates in software a network comprised of billions of neurons which is exposed to trillions of samples to detect patterns. These patterns allow LLMs to generate text and images, write code, translate languages, and talk trash. LLMs are structured on the human brain which uses about 100 billion neurons to establish some 100 trillion synaptic connections – instances where neurons connect and swap data.

Black Box

Because they are not programmed, or designed, nobody really understands much about the inner workings of LLMs. Engineers are often stumped when their models spout nonsense or resort to outright lying. These ‘hallucinations’ are the Achilles’ heel of AI and scientists are trying to figure out how to armour plate this weakness.

Tinkering around with Claude, researchers at Anthropic tried a workaround involving the use of a much smaller language model, bolted, as it were, onto Claude. This ‘sparse autoencoder’ can identify and map neuron clusters that fire together on a specific input query. The mapped clusters, called ‘features’, may then be manipulated.

In one rather hilarious experiment, researchers spiked a feature related to San Francisco’s Golden Gate Bridge and, sure enough, Claude soon became obsessed with that bridge, steering all conversation to it. Asked how to spend $10, Claude suggested driving over the bridge, paying toll. Asked to write a love story, Claude obliged with one about a lovelorn car that dreamed about crossing the bridge.

The discovery and mapping of features may be useful in preventing LLMs to engage in technical discussions about the manufacture of weapons of mass destruction or discourage topics such as racism, sexism, etc.

Sparse autoencoders notwithstanding, scientists and engineers did not get any closer to understanding the origins or causes of AI hallucinations. However, some have suggested a surprisingly low-tech workaround: feed a LLM repeatedly the same or similar queries and look for entropy – degrees of inconsistency – in its answers. That entropy can be used to gauge the level of uncertainty of a given LLM. A low entropy would indicate that the answer can be trusted, whilst high entropy would point to an LLM that makes stuff up as it goes along.

A few futurologists consider the enduring mystery surrounding the inner workings of LLMs as a promising sign that artificial intelligence is nearing the singularity, the point at which its capabilities overtake those of the human brain, ushering in an era of ‘trans-humanism’. They derive their unbound optimism from the fact that neurologists too have been unable to unravel the mysteries of the human brain just like LLMs defy dissection.

Cover photo: Edinger-Westphal neurons in culture and stained for Tubulin (green) and Synapsin (red). Imaged with a Zeiss Axioskop 2 FS Plus equipped with a Zeiss Axiocam HRm.

© 2008 Photo by MR McGill